Building Blocks

We introduce the building blocks of an Iter8 experiment below.

Apps and Versions

Iter8 defines an app broadly as any entity that can be deployed (run), versioned, and for which metrics can be collected.

Examples

- A stateless K8s application whose versions correspond to deployments.

- A stateful K8s application whose versions correspond to statefulsets.

- A Knative application whose versions correspond to revisions.

- A KFServing inference service whose versions correspond to model revisions.

- A distributed application whose versions correspond to Helm releases.

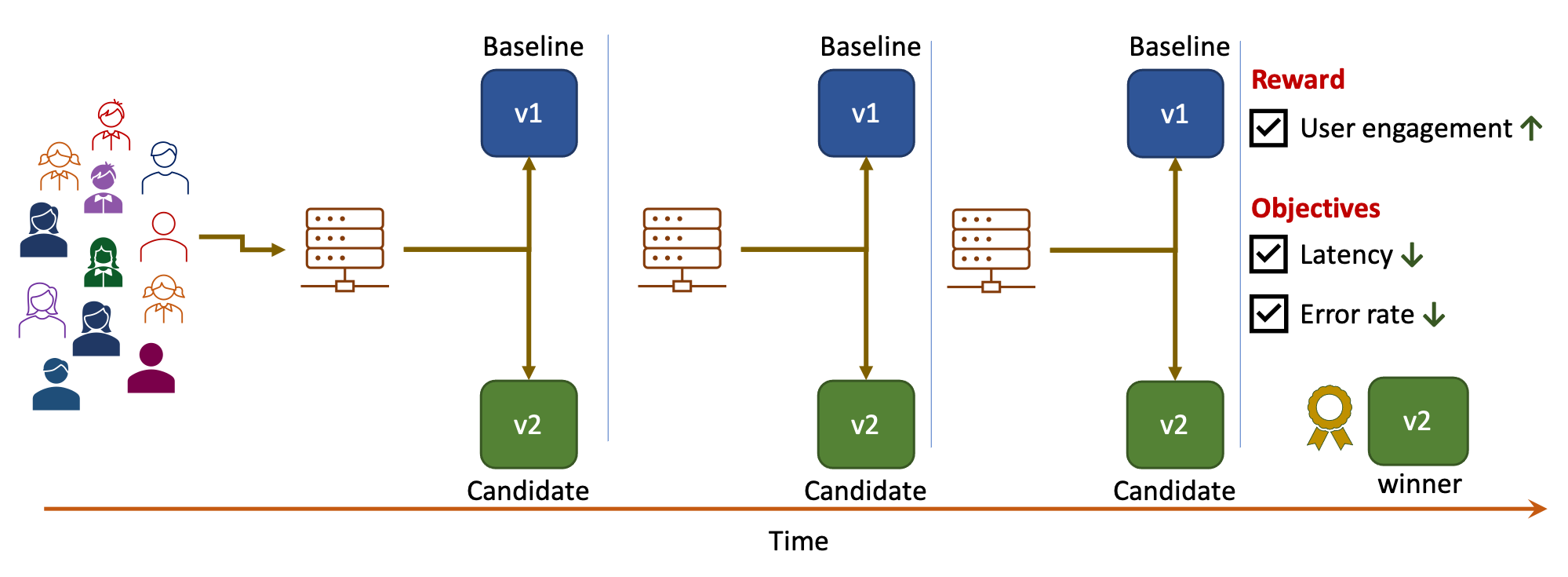

Objectives (SLOs)

Objectives correspond to service-level objectives or SLOs. In Iter8 experiments, objectives are specified as metrics along with acceptable limits on their values. Iter8 will report how versions are performing with respect to these metrics and whether or not they satisfy the objectives.

Examples

- The 99th-percentile tail latency of the application should be under 50 msec.

- The precision of the ML model version should be over 92%.

- The (average) number of GPU cores consumed by a model should be under 5.0.
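A minimal Python sketch of how objectives like these could be checked against one version's metrics. The `Objective` class, its `upper_limit`/`lower_limit` fields, the metric names, and the sample values are all hypothetical illustrations, not Iter8's configuration format or API.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional


@dataclass
class Objective:
    """Hypothetical SLO: a metric name plus optional limits on its value."""
    metric: str
    upper_limit: Optional[float] = None  # value must be under this ("under X")
    lower_limit: Optional[float] = None  # value must be over this ("over X")

    def is_satisfied(self, value: float) -> bool:
        if self.upper_limit is not None and value >= self.upper_limit:
            return False
        if self.lower_limit is not None and value <= self.lower_limit:
            return False
        return True


def satisfies_all(objectives: List[Objective], metrics: Dict[str, float]) -> bool:
    """True if one version's metric values meet every objective."""
    return all(o.is_satisfied(metrics[o.metric]) for o in objectives)


# The three example objectives above, with made-up metric names.
objectives = [
    Objective("latency-p99-msec", upper_limit=50.0),
    Objective("precision-percent", lower_limit=92.0),
    Objective("gpu-cores-mean", upper_limit=5.0),
]

v2_metrics = {"latency-p99-msec": 42.0, "precision-percent": 93.5, "gpu-cores-mean": 4.2}
print(satisfies_all(objectives, v2_metrics))  # True
```

Treating "under 50 msec" as a strict inequality is a choice made in this sketch; an actual objective definition would need to state whether the limit value itself is acceptable.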

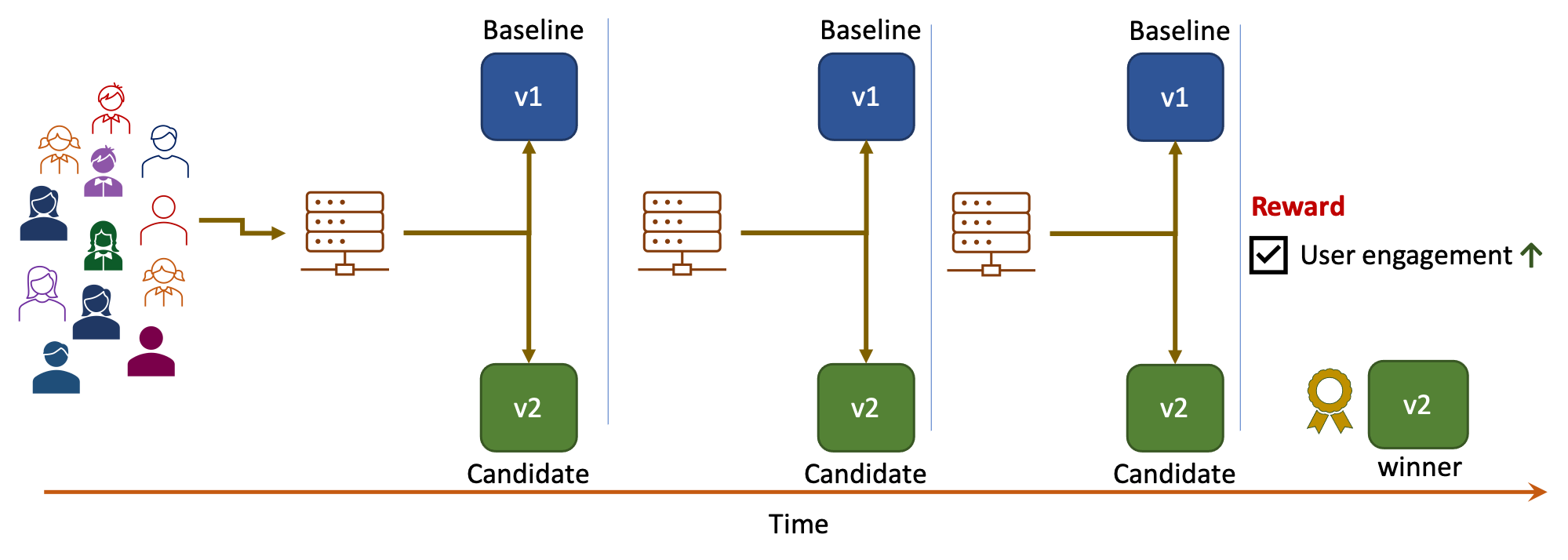

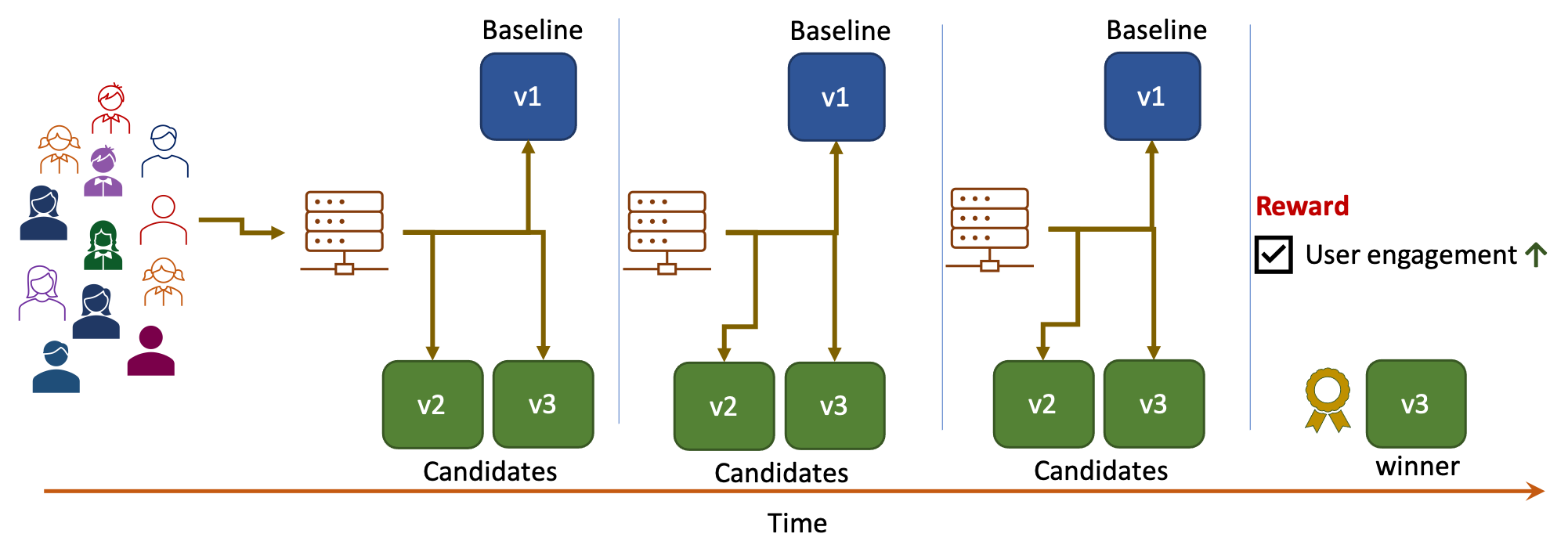

Reward

Reward typically corresponds to a business metric which you wish to optimize during an A/B testing experiment. In Iter8 experiments, reward is specified as a metric along with a preferred direction, which could be high or low.

Examples

- User engagement

- Conversion rate

- Click-through rate

- Revenue

- Precision, recall, or accuracy (for ML models)

- Number of GPU cores consumed by an ML model

All but the last example above have preferred direction high; the last example (number of GPU cores consumed) is a reward with preferred direction low.

Baseline and candidate versions

Every Iter8 experiment involves a baseline version and zero, one, or more candidate versions. Experiments often involve two versions, a baseline and a candidate, where the baseline corresponds to the stable version of your app and the candidate corresponds to a canary.

Testing strategy

Testing strategy determines how the winning version (winner) in an experiment is identified.

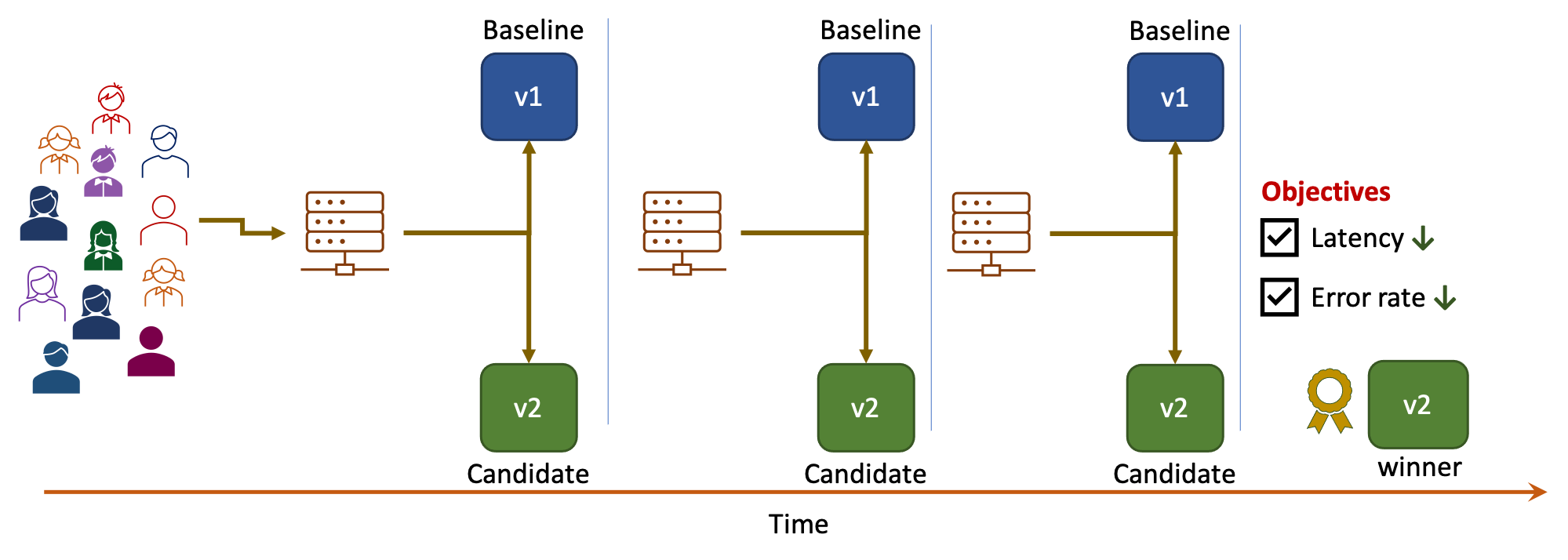

SLO validation

SLO validation experiments may involve a single version or two versions.

SLO validation experiment with baseline version and no candidate (conformance testing): If baseline satisfies the objectives, it is the winner. Otherwise, there is no winner.

SLO validation experiment with baseline and candidate versions: If candidate satisfies the objectives, it is the winner. Else, if baseline satisfies the objectives, it is the winner. Else, there is no winner.
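The winner rules for both kinds of SLO validation experiment can be written as one short function. This is a sketch: the function name is hypothetical, and `satisfies` is a hypothetical callback standing in for however each version is evaluated against the objectives.

```python
from typing import Callable, Optional


def slo_validation_winner(
    baseline: str,
    candidate: Optional[str],
    satisfies: Callable[[str], bool],
) -> Optional[str]:
    """Winner of an SLO validation experiment, or None if there is no winner.

    Pass candidate=None for a conformance test (baseline only).
    """
    # The candidate wins whenever it satisfies the objectives.
    if candidate is not None and satisfies(candidate):
        return candidate
    # Otherwise fall back to the baseline if it satisfies them.
    if satisfies(baseline):
        return baseline
    return None


passing = {"v1": True, "v2": False}
print(slo_validation_winner("v1", None, lambda v: passing[v]))  # v1 (conformance test)
print(slo_validation_winner("v1", "v2", lambda v: passing[v]))  # v1 (candidate fails)
```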

A/B testing

A/B testing experiments involve a baseline version, a candidate version, and a reward metric. The version which performs best in terms of the reward metric is the winner.

A/B/n testing

A/B/n testing experiments involve a baseline version, two or more candidate versions, and a reward metric. The version which performs best in terms of the reward metric is the winner.
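For A/B and A/B/n testing, picking the winner comes down to comparing reward values in the reward's preferred direction. The sketch below treats A/B as the one-candidate case of A/B/n. The function name and inputs are hypothetical, and it compares point values only; a real experiment also has to account for statistical uncertainty in the observed rewards, which this sketch ignores.

```python
from typing import Dict, List


def reward_winner(baseline: str, candidates: List[str],
                  reward: Dict[str, float], preferred_direction: str = "high") -> str:
    """Winner of an A/B (one candidate) or A/B/n (two or more candidates) experiment.

    reward maps each version to its observed reward value; preferred_direction
    is "high" (e.g. conversion rate) or "low" (e.g. GPU cores consumed).
    """
    if preferred_direction not in ("high", "low"):
        raise ValueError(f"unknown preferred direction: {preferred_direction!r}")
    versions = [baseline] + candidates
    pick = max if preferred_direction == "high" else min
    return pick(versions, key=lambda v: reward[v])


# A/B: conversion rate, higher is better.
print(reward_winner("v1", ["v2"], {"v1": 0.031, "v2": 0.036}))  # v2
# A/B/n: GPU cores consumed, lower is better.
print(reward_winner("v1", ["v2", "v3"], {"v1": 4.8, "v2": 3.9, "v3": 4.1}, "low"))  # v2
```

Because Python's `max` and `min` return the first of several equal items, ties in this sketch go to the baseline.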

Hybrid (A/B + SLOs) testing

Hybrid (A/B + SLOs) testing experiments combine A/B or A/B/n testing on the one hand with SLO validation on the other. Among the versions that satisfy objectives, the version which performs best in terms of the reward metric is the winner. If no version satisfies objectives, then there is no winner.
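The hybrid rule filters by objectives first and then compares rewards among the survivors. A minimal sketch, assuming a hypothetical `satisfies` callback for the objectives and a dictionary of observed reward values per version:

```python
from typing import Callable, Dict, List, Optional


def hybrid_winner(versions: List[str], satisfies: Callable[[str], bool],
                  reward: Dict[str, float],
                  preferred_direction: str = "high") -> Optional[str]:
    """Winner of a hybrid (A/B + SLOs) experiment, or None if there is no winner."""
    if preferred_direction not in ("high", "low"):
        raise ValueError(f"unknown preferred direction: {preferred_direction!r}")
    # Only versions that satisfy every objective are eligible to win.
    eligible = [v for v in versions if satisfies(v)]
    if not eligible:
        return None
    # Among eligible versions, the best reward wins.
    pick = max if preferred_direction == "high" else min
    return pick(eligible, key=lambda v: reward[v])


passing = {"v1": True, "v2": False, "v3": True}
conversion = {"v1": 0.031, "v2": 0.040, "v3": 0.035}
print(hybrid_winner(["v1", "v2", "v3"], lambda v: passing[v], conversion))  # v3
```

Here v2 has the highest conversion rate but fails its objectives, so v3 wins.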

Rollout strategy

Rollout strategy defines how traffic is split between versions during the experiment.

Iter8 makes it easy for you to take full advantage of the traffic engineering features available in your K8s environment (i.e., those supported by the ingress or service mesh technology in your K8s cluster).

A few common deployment strategies used in Iter8 experiments are described below. In the following descriptions, v1 and v2 refer to the current and new versions of the application, respectively.

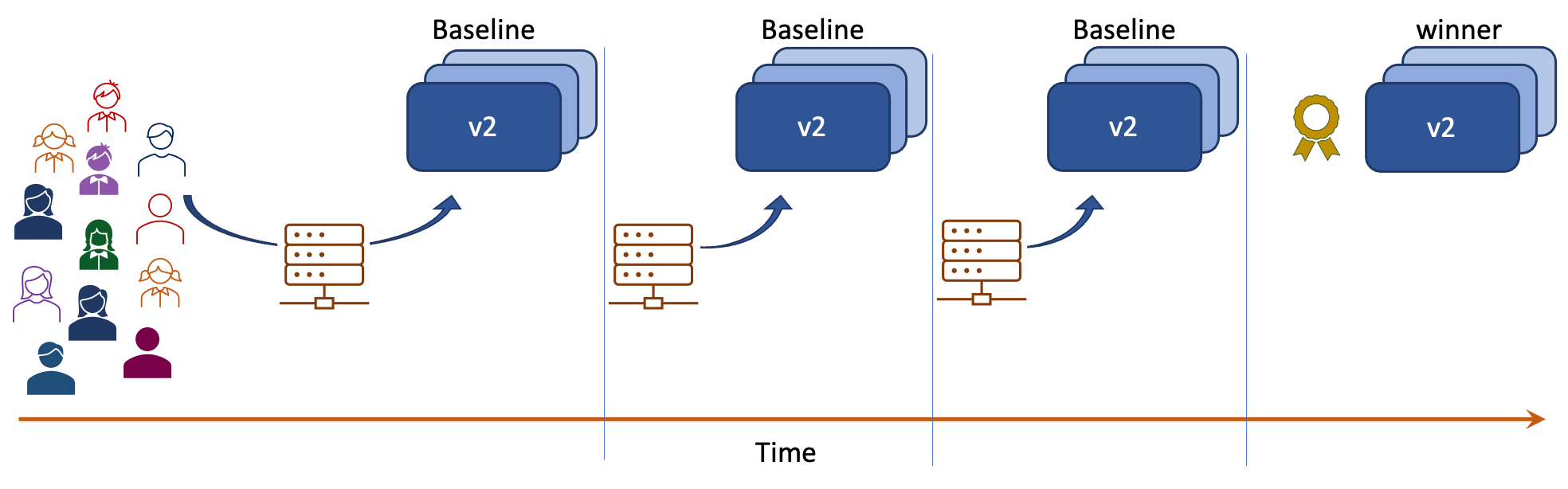

Simple rollout & rollback

This pattern is modeled after the rolling update of a Kubernetes deployment.

- After v2 is deployed, it replaces v1.

- If v2 is the winner of the experiment, it is retained.

- Else, v2 is rolled back and v1 is retained.

All traffic flows to v2 during the experiment.

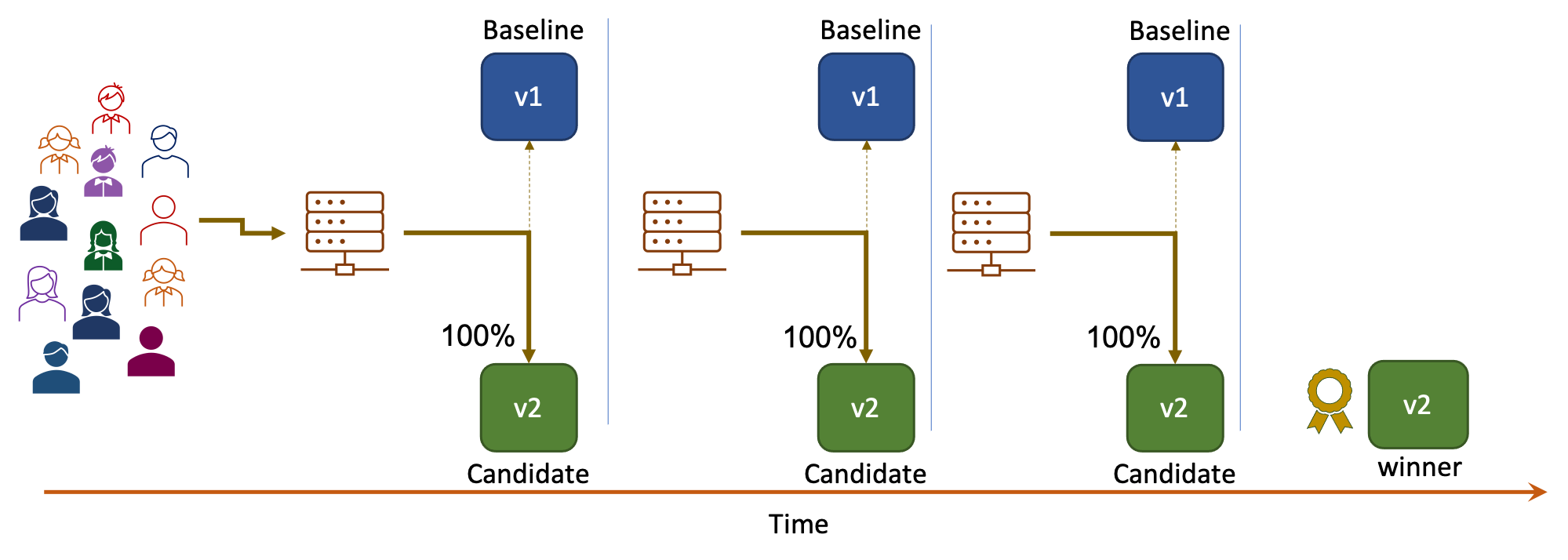

BlueGreen

- After v2 is deployed, both v1 and v2 are available.

- All traffic is routed to v2.

- If v2 is the winner of the experiment, all traffic continues to flow to v2.

- Else, all traffic is routed back to v1.
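The BlueGreen steps above can be summarized as the traffic split during and after the experiment. In this sketch, the function name, the `experiment_done` flag, and the percentage representation are hypothetical.

```python
from typing import Dict, Optional


def bluegreen_split(experiment_done: bool, winner: Optional[str] = None) -> Dict[str, int]:
    """Percentage of traffic routed to v1 and v2 in a BlueGreen rollout.

    Both versions stay deployed throughout; only the routing changes.
    """
    if not experiment_done:
        return {"v1": 0, "v2": 100}  # during the experiment, all traffic goes to v2
    if winner == "v2":
        return {"v1": 0, "v2": 100}  # v2 won: traffic continues to flow to v2
    return {"v1": 100, "v2": 0}      # otherwise: all traffic is routed back to v1


print(bluegreen_split(False))       # {'v1': 0, 'v2': 100}
print(bluegreen_split(True, "v2"))  # {'v1': 0, 'v2': 100}
print(bluegreen_split(True, None))  # {'v1': 100, 'v2': 0}
```

Because v1 stays available throughout, falling back to it in this pattern is a routing change only.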

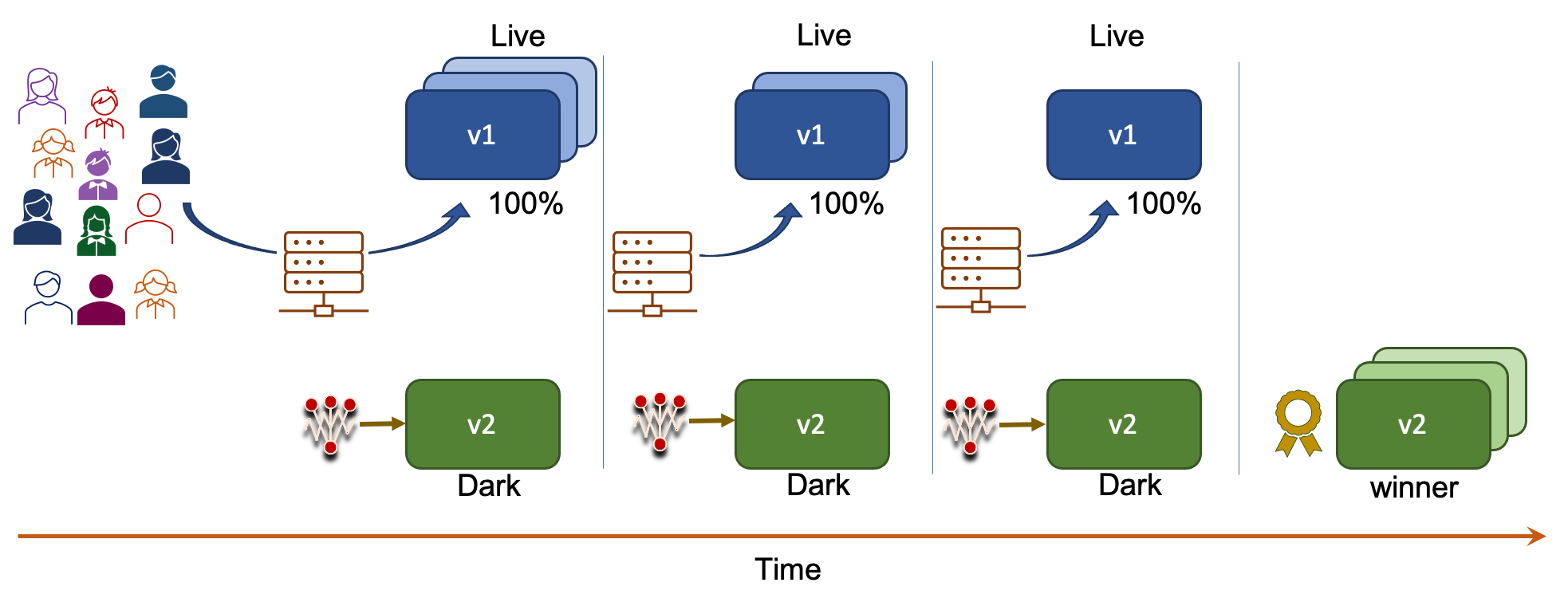

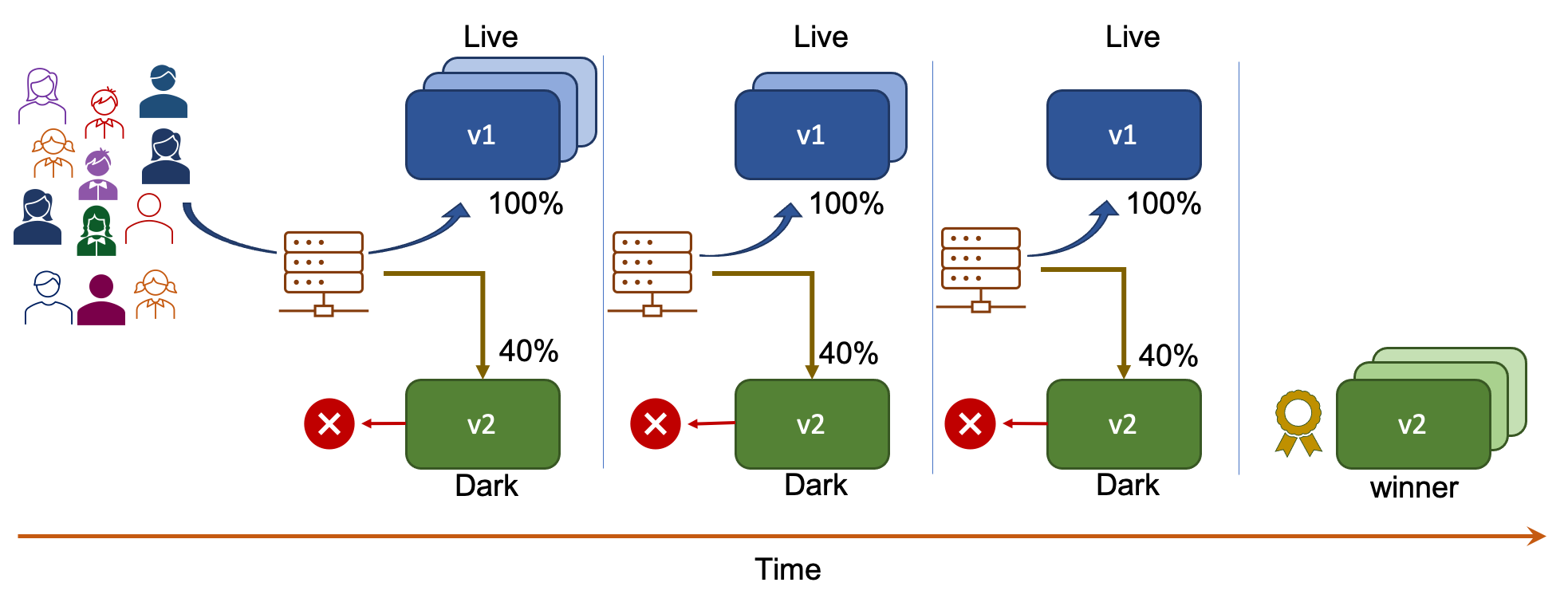

Dark launch

- After v2 is deployed, it is hidden from end-users.

- v2 is not used to serve end-user requests but can still be experimented with.

Built-in load/metrics

During the experiment, Iter8 generates load for v2 and collects built-in metrics.
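To illustrate what built-in metrics can look like, here is a sketch that summarizes the results of generated requests into a request count, error rate, mean latency, and 99th-percentile latency. The metric names, treating HTTP status >= 400 as an error, and the nearest-rank percentile method are assumptions made for this example; the set of metrics Iter8 actually collects, and how it computes them, may differ.

```python
from typing import Dict, List, Tuple


def summarize(results: List[Tuple[float, int]]) -> Dict[str, float]:
    """Summarize (latency_msec, http_status) pairs recorded while generating load."""
    if not results:
        raise ValueError("no requests recorded")
    n = len(results)
    latencies = sorted(latency for latency, _ in results)
    errors = sum(1 for _, status in results if status >= 400)
    rank = (99 * n + 99) // 100  # ceil(0.99 * n): nearest-rank p99, 1-indexed
    return {
        "request-count": float(n),
        "error-rate": errors / n,
        "mean-latency-msec": sum(latencies) / n,
        "p99-latency-msec": latencies[rank - 1],
    }


# 99 successful requests (1 to 99 msec) plus one slow failure.
results = [(float(ms), 200) for ms in range(1, 100)] + [(250.0, 503)]
print(summarize(results))
# {'request-count': 100.0, 'error-rate': 0.01, 'mean-latency-msec': 52.0, 'p99-latency-msec': 99.0}
```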

Traffic mirroring (shadowing)

Mirrored traffic is a replica of real user requests¹ that is routed to v2 and used to collect metrics for v2.

Canary

Canary deployment involves exposing v2 to a small fraction of end-user requests during the experiment before exposing it to a larger fraction of requests or all the requests.

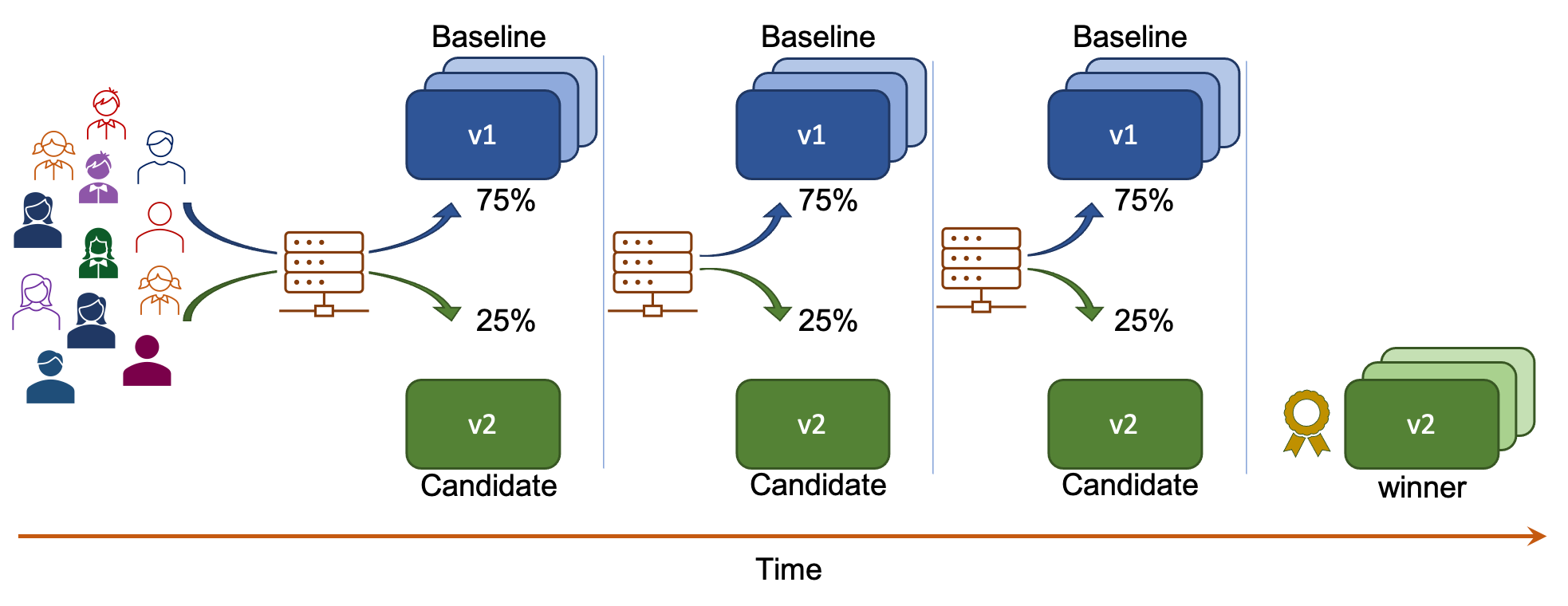

Fixed-%-split

A fixed % of end-user requests is sent to v2 and the rest is sent to v1.
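A fixed-%-split can be sketched as an independent weighted choice per request. The function name and the use of Python's `random` module are illustrative assumptions; in an Iter8 experiment the split is enforced by the ingress or service mesh in your cluster, not by application code like this.

```python
import random
from typing import Optional


def route_fixed_split(v2_percent: float, rng: Optional[random.Random] = None) -> str:
    """Choose the version for one end-user request under a fixed-%-split."""
    if not 0 <= v2_percent <= 100:
        raise ValueError("v2_percent must be between 0 and 100")
    if rng is None:
        rng = random.Random()
    # rng.random() is uniform on [0, 1), so this is True with probability v2_percent/100.
    return "v2" if rng.random() * 100 < v2_percent else "v1"


rng = random.Random(0)  # seeded for repeatability
to_v2 = sum(route_fixed_split(10, rng) == "v2" for _ in range(10_000))
print(to_v2)  # approximately 1000
```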

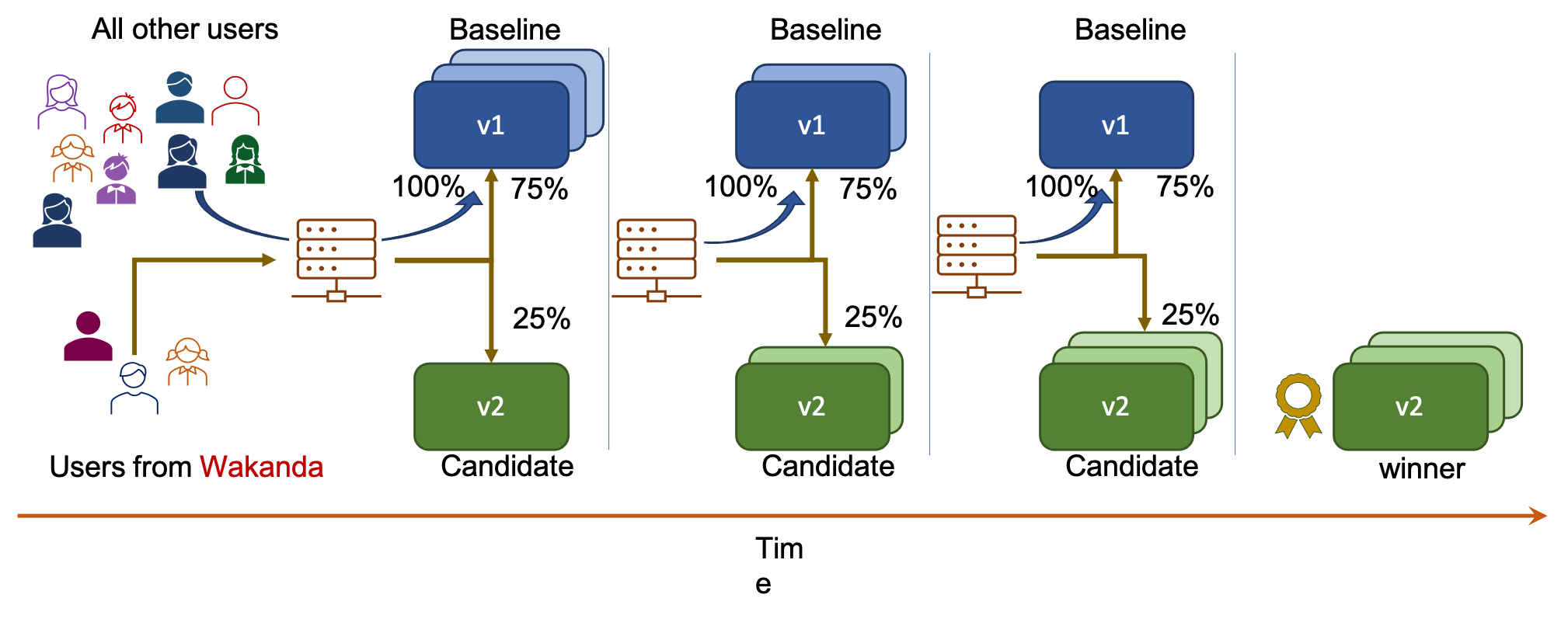

Fixed-%-split with user segmentation

- Only a specific segment of the users participates in the experiment.

- A fixed % of requests from the participating segment is sent to v2. The rest is sent to v1.

- All requests from end-users in the non-participating segment are sent to v1.
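With user segmentation, the percentage split applies only to participating users. In this sketch, the function names are hypothetical and the segment is a hypothetical predicate on request headers (`x-beta: true`); a real segment could be defined by other request attributes.

```python
import random
from typing import Callable, Dict, Optional


def route_segmented(headers: Dict[str, str],
                    in_segment: Callable[[Dict[str, str]], bool],
                    v2_percent: float,
                    rng: Optional[random.Random] = None) -> str:
    """Fixed-%-split for the participating segment; v1 for everyone else."""
    if not in_segment(headers):
        return "v1"  # non-participating users always get v1
    if rng is None:
        rng = random.Random()
    return "v2" if rng.random() * 100 < v2_percent else "v1"


def beta_users(headers: Dict[str, str]) -> bool:
    """Hypothetical segment: requests that carry the header x-beta: true."""
    return headers.get("x-beta") == "true"


print(route_segmented({"x-beta": "false"}, beta_users, 100))  # v1 (not in the segment)
print(route_segmented({"x-beta": "true"}, beta_users, 100))   # v2
```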

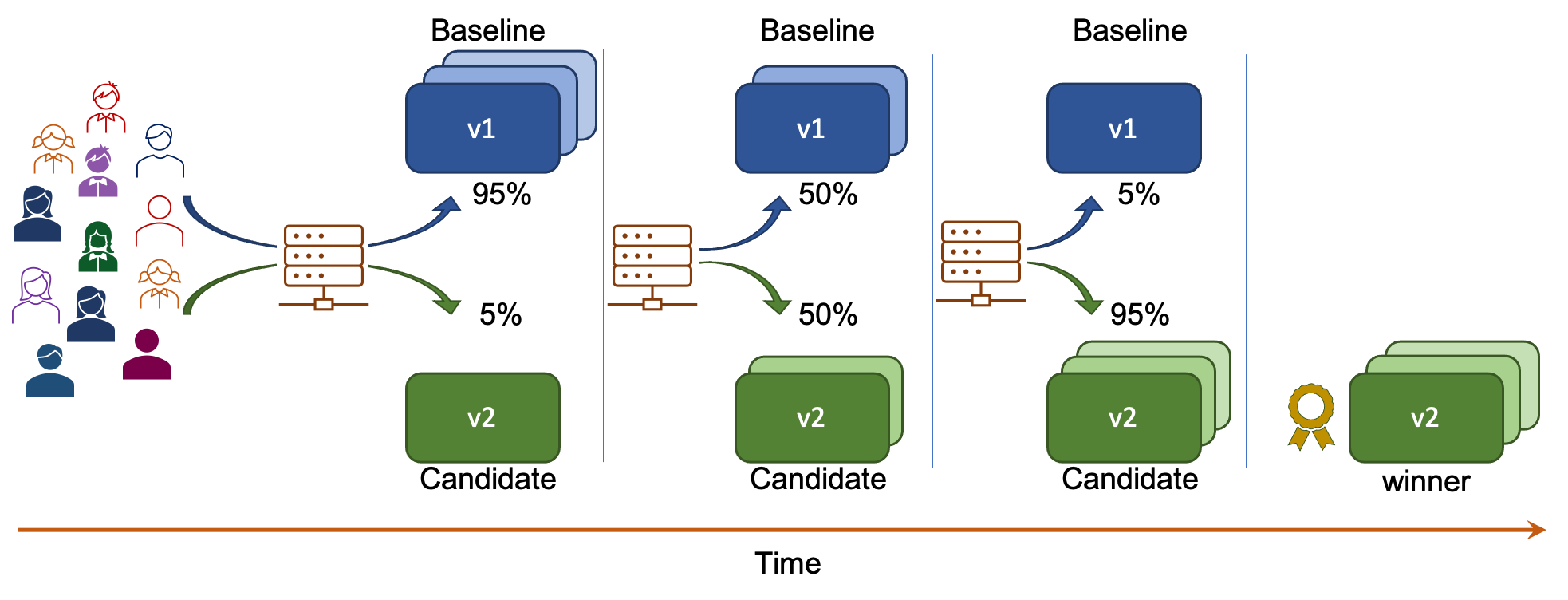

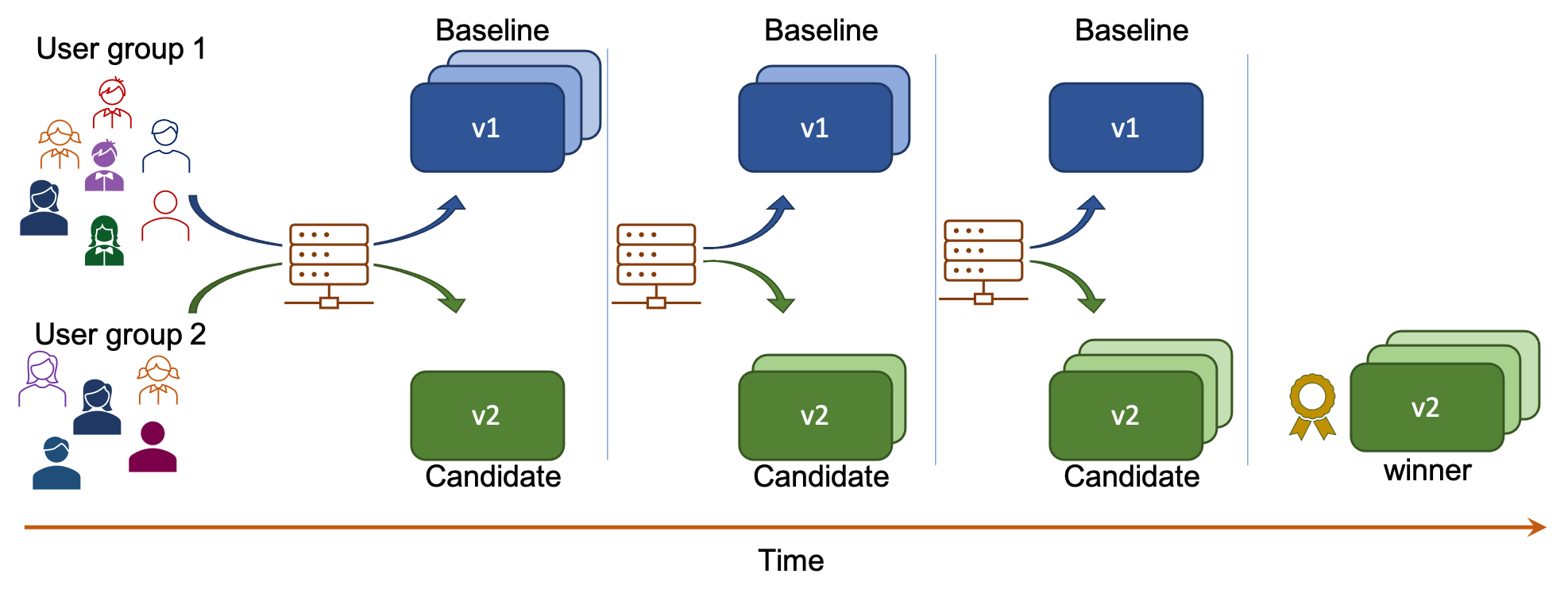

Progressive traffic shift

Traffic is incrementally shifted to the winner over multiple iterations.
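One way to picture a progressive traffic shift is to move a fixed number of percentage points to the winner at each iteration. The function name and the step size of 20 points are hypothetical, since the text does not say how much traffic moves per iteration; in this sketch the caller decides the winner at each iteration.

```python
from typing import Dict


def shift_toward_winner(weights: Dict[str, int], winner: str, step: int = 20) -> Dict[str, int]:
    """One iteration of a progressive traffic shift.

    weights maps versions to integer traffic percentages summing to 100.
    Up to `step` points are taken from the other versions and given to winner.
    """
    new = dict(weights)
    remaining = step
    for version in new:
        if version == winner or remaining == 0:
            continue
        moved = min(new[version], remaining)
        new[version] -= moved
        new[winner] += moved
        remaining -= moved
    return new


weights = {"v1": 100, "v2": 0}
for iteration in range(1, 6):
    weights = shift_toward_winner(weights, "v2")
    print(iteration, weights)
# 1 {'v1': 80, 'v2': 20} ... 5 {'v1': 0, 'v2': 100}
```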

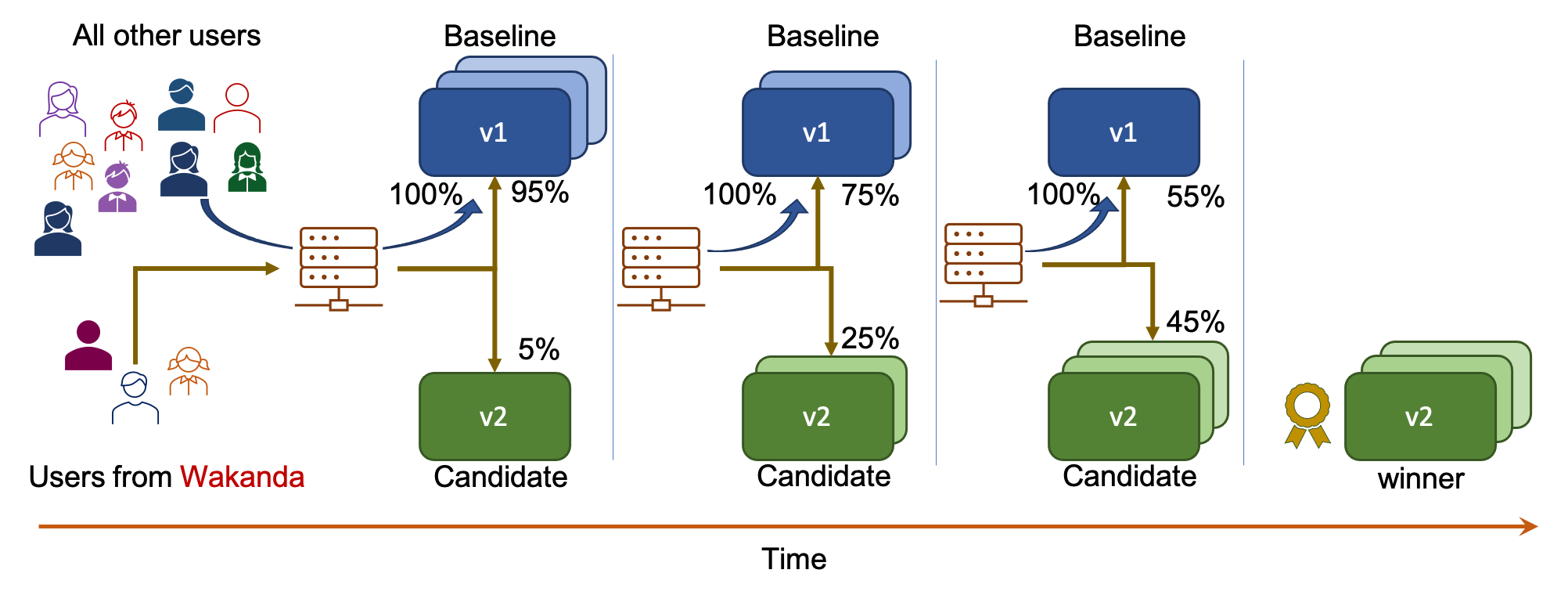

Progressive traffic shift with user segmentation

- Only a specific segment of the users participates in the experiment.

- Within this segment, traffic is incrementally shifted to the winner over multiple iterations.

- All requests from end-users in the non-participating segment are sent to v1.

Session affinity

Session affinity, sometimes referred to as sticky sessions, routes all requests coming from an end-user to the same version consistently throughout the experiment.

User grouping for affinity can be configured based on a number of different request attributes, including request headers, cookies, query parameters, geolocation, user agent (browser version, screen size, operating system), and language.
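Hash-based bucketing is one common way to get sticky assignment: hashing a stable user key always yields the same bucket, so the same user always lands on the same version. This is a swapped-in illustration; the text does not say how Iter8 or the underlying ingress or mesh implements affinity, and the function name and 100-bucket scheme are hypothetical.

```python
import hashlib


def sticky_version(user_key: str, v2_percent: int) -> str:
    """Map a user consistently to v1 or v2 for the whole experiment.

    user_key is whatever attribute groups users for affinity, e.g. a cookie
    value or a header. SHA-256 is stable across processes, unlike Python's
    built-in hash() for strings, which is randomized per process by default.
    """
    digest = hashlib.sha256(user_key.encode("utf-8")).digest()
    bucket = int.from_bytes(digest[:8], "big") % 100  # stable bucket in [0, 100)
    return "v2" if bucket < v2_percent else "v1"


# The same user gets the same version on every request.
print(sticky_version("user-42", 30) == sticky_version("user-42", 30))  # True
```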

Version promotion

Iter8 can automatically promote the winning version at the end of an experiment.

¹ It is possible to mirror only a certain percentage of the requests instead of all requests.